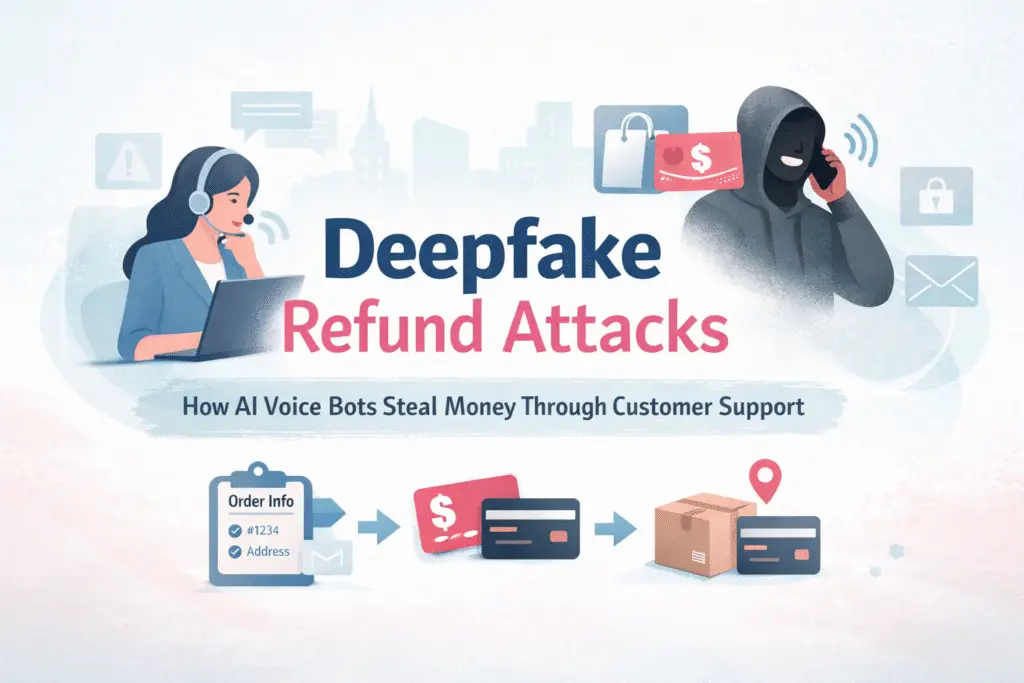

Deepfake refund attacks use AI voice bots to trick customer support into issuing refunds, store credit, or replacements.

Imagine it’s a busy evening in peak season.

A customer calls your support line. They sound stressed but polite. They know the order number, the shipping address, and the email on file. They say the package never arrived. They need a refund today because the outfit was for an event. They can’t access their email right now (traveling / phone lost / “email locked”). They ask you to refund to store credit or a different card.

Your agent wants to help. The queue is long. The request feels normal.

It may also be fraud, and the caller might not even be a real person. It could be an AI voice bot (or a deepfake voice) trained to sound human, emotional, and convincing.

Welcome to deepfake refund attacks: a fast-growing kind of fraud where attackers don’t “hack” your servers first. They hack your process.

This beginner-friendly guide explains what these attacks are, how they work, why they succeed (especially in fashion e-commerce), and which defenses stop them without overwhelming your customer support team.

What is a deepfake refund attack?

A deepfake refund attack is when a scammer uses an AI-generated voice (or a cloned voice) to impersonate a legitimate customer and trick your customer support team into issuing:

- a refund

- store credit / gift card

- a replacement shipment

- an account change (email/phone/address)

- a refund to a new payment method (payout diversion)

Important nuance: the “deepfake” part (fake voice) is not always the core trick. The core trick is social engineering using urgency, emotion, and “just enough account details” to push an agent into making an exception.

So even if your website is well secured, your support workflow may become the attacker’s quickest route to money.

Why this is getting worse now

Deepfake refund fraud is rising because AI makes scams:

1) Cheap

A bot can place thousands of calls for little cost.

2) Fast

AI can call 24/7. Humans can’t.

3) Scalable

Attackers can run multiple “customers” in parallel, testing different scripts until they find a weak spot.

4) More believable

Modern voice generation can include pauses, frustration, politeness, and natural “um/uh” filler. It’s often believable enough to persuade an agent, especially during high-volume shifts.

That last point matters because customer support environments are built for speed and empathy, not adversarial verification.

Why fashion e-commerce is a perfect target

Fashion brands (especially D2C, or direct-to-consumer, and luxury) have a few traits attackers love:

- High return rates compared to many other categories make refund requests seem normal.

- “Wardrobing” (wearing something once and returning it) blurs the line between everyday return abuse and organized fraud.

- Store credit is common and often issued quickly to preserve customer experience.

- Peak-season pressure (launch drops, holidays, Eid, wedding season) increases urgency, and urgency fuels exceptions.

- Loyalty programs and account benefits create extra value targets beyond refunds.

If your support team can issue credits, override return windows, or resend orders, your call center becomes a high-value financial system whether you intended it or not.

The real attack: process hacking

Most people hear “deepfake” and think the main risk is the voice being fake. In practice, the bigger issue is process design.

Customer support is optimized for empathy, quick resolution, low handle time, and high CSAT (Customer Satisfaction Score). Fraud prevention is optimized for verification, consistency, evidence, and controlled outcomes.

When a business focuses solely on quickly making the customer happy, attackers identify the vulnerabilities:

- refunds issued before returns arrive

- refunds redirected to a new method

- store credits issued without strong step-up verification

- contact details changed during the same call as a refund

- “exception culture” when someone is angry or upset

Deepfake refund attacks are essentially policy exploitation at scale.

How deepfake refund attacks work (step by step)

Here’s a realistic deepfake refund chain that fits fashion retail.

Step 1: Get “just enough” customer data

Attackers don’t need full identity takeover. They want enough details to sound authentic:

- order number (often visible in email)

- name + shipping address

- item name/size/color

- delivery date and courier

- sometimes the last four digits of a phone number

Common sources include credential stuffing (reused passwords), phishing, email compromise, leaked customer lists, overshared receipts/screenshots on social media, data brokers, and OSINT (open-source intelligence, i.e. publicly available information).

Step 2: Start with low-friction support questions (recon)

Bots often open with “safe” questions to see what your agents reveal, such as:

- “Can you confirm the delivery status?”

- “What address is on file?”

- “Can you resend my receipt to this email?”

This is probing. They’re mapping your verification habits.
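One way to starve this kind of recon is to confirm details by matching what the caller states, never by reading back what is on file. The Python sketch below illustrates that idea; the helper names (`check_detail`, `can_read_back`) and the `NEVER_READ_BACK` field list are hypothetical, and the normalization rule is a deliberately simple assumption.

```python
import re

# Hypothetical fields the support tool never reads back to a caller
NEVER_READ_BACK = {"address_on_file", "email_on_file", "phone_on_file"}

def normalize(text: str) -> str:
    # Lowercase, turn punctuation runs into spaces, and collapse whitespace
    text = re.sub(r"[^a-z0-9]+", " ", text.lower())
    return " ".join(text.split())

def check_detail(caller_value: str, on_file_value: str) -> str:
    """Return only 'match' or 'no match'; the on-file value never leaves the tool."""
    return "match" if normalize(caller_value) == normalize(on_file_value) else "no match"

def can_read_back(field: str) -> bool:
    # Delivery status may be shared; contact details on file may not
    return field not in NEVER_READ_BACK

print(check_detail("12 Rose St.", "12 rose st"))  # match
print(can_read_back("address_on_file"))           # False
```

A probing caller who asks “What address is on file?” gets a request to state it instead, and learns only whether it matched.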

Step 3: Apply emotional pressure and urgency

Common scripts include:

- “I need this today for an event.”

- “I’m traveling and can’t access my email.”

- “My phone is broken, so I can’t get the OTP.”

- “I’m upset; I’ve been a loyal customer.”

The bot can deliver urgency consistently and politely, call after call.

Step 4: Ask for a high-value action (money move)

Typical goals:

- A) Refund to store credit / gift card (fast payout, hard to reverse, easy to resell)

- B) Refund to a different payment method (payout diversion)

- C) Replacement shipment (valuable for high-demand drops and limited items)

- D) Change email/phone + trigger reset (sets up full account takeover)
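Each of these goals is worth more to an attacker than an order-status check, so one common approach is to require stronger verification as the value of the action rises. The Python sketch below maps actions to a minimum verification level; the action names, the three levels, and the specific mapping are assumptions for illustration, not an industry standard.

```python
from enum import IntEnum

class Verification(IntEnum):
    # Ordered weakest to strongest; a higher level satisfies any lower requirement
    KNOWLEDGE = 1        # caller recites order number, address, etc. (often leaked)
    ON_FILE_CHANNEL = 2  # confirmed via the email/app/phone already on file
    LOGGED_IN = 3        # confirmed inside an authenticated session

# Hypothetical mapping of support actions to the minimum verification required
REQUIRED = {
    "order_status": Verification.KNOWLEDGE,
    "refund_original_method": Verification.ON_FILE_CHANNEL,
    "replacement_shipment": Verification.ON_FILE_CHANNEL,
    "store_credit": Verification.ON_FILE_CHANNEL,
    "refund_new_method": Verification.LOGGED_IN,
    "contact_change": Verification.LOGGED_IN,
}

def allowed(action: str, achieved: Verification) -> bool:
    # Unknown actions default to the strictest requirement
    return achieved >= REQUIRED.get(action, Verification.LOGGED_IN)

print(allowed("store_credit", Verification.KNOWLEDGE))  # False
```

The key design choice is that “knows the order details” sits at the bottom tier: those details are exactly what Step 1 collects.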

Step 5: Repeat until someone says yes

Attackers don’t need to defeat your best agent. They only need one approval. Bots will call repeatedly, rotate phone numbers, slightly modify the story, try different tones, and target busy shifts and new hires.

Think “call center credential stuffing,” but with conversation instead of passwords.
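Because one approval is all an attacker needs, a denial by one agent should follow the order to every later call. A minimal Python sketch of a shared ledger keyed by order ID is shown below; `OrderLedger` and its methods are hypothetical, and in practice this record would live in your helpdesk or case-management system.

```python
from dataclasses import dataclass, field

@dataclass
class OrderLedger:
    """Hypothetical shared record of denied high-value requests, visible to every agent."""
    denials: dict[str, list[tuple[str, str]]] = field(default_factory=dict)

    def record_denial(self, order_id: str, agent: str, reason: str) -> None:
        self.denials.setdefault(order_id, []).append((agent, reason))

    def prior_denials(self, order_id: str) -> int:
        return len(self.denials.get(order_id, []))

    def needs_escalation(self, order_id: str) -> bool:
        # Any earlier denial means the next agent escalates instead of deciding alone
        return self.prior_denials(order_id) > 0

ledger = OrderLedger()
ledger.record_denial("A1001", "agent_7", "refund to a new card requested")
print(ledger.needs_escalation("A1001"))  # True
print(ledger.needs_escalation("A2002"))  # False
```

Keying on the order (not the phone number) matters because attackers rotate numbers between attempts.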

Red flags support agents can use (no fancy tools required)

You don’t need deepfake detection to catch most of these. You need consistent guardrails and a few clear warning signs.

Conversation red flags

- The caller refuses normal verification channels (“can’t access email/app/OTP”).

- They demand an exception and push urgency hard.

- They insist on a specific outcome (“refund to gift card only”).

- They repeat the same phrases even when challenged.

- Their story changes slightly across the call.
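Agents can use these signals as a simple checklist. The Python sketch below counts observed flags and recommends pausing and escalating once a threshold is reached; the flag keys and the threshold of two are hypothetical and should be tuned against your own case history.

```python
CONVERSATION_FLAGS = {
    "refuses_verification": "Refuses normal verification channels (email/app/OTP)",
    "pushes_urgency": "Demands an exception and pushes urgency hard",
    "insists_on_outcome": "Insists on a specific outcome (e.g. gift card only)",
    "repeats_phrases": "Repeats the same phrases even when challenged",
    "story_shifts": "Story changes slightly across the call",
}

ESCALATE_AT = 2  # hypothetical threshold, not a benchmark

def review_call(observed: set[str]) -> tuple[int, bool]:
    """Return (number of flags seen, whether to pause and escalate)."""
    unknown = observed - set(CONVERSATION_FLAGS)
    if unknown:
        raise ValueError(f"unknown flags: {sorted(unknown)}")
    count = len(observed)
    return count, count >= ESCALATE_AT

print(review_call({"refuses_verification", "pushes_urgency"}))  # (2, True)
```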

Operational red flags

- The customer has made multiple contacts regarding the same order within a short period.

- Multiple phone numbers are contacting support regarding the same account.

- The same interaction involves both a refund request and a change of contact information (email, phone, or address).

- Requests to refund before the return is received (especially for high-value items).

- Spikes in refund approvals by one agent or one team.
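Most of these signals can be computed from a basic contact log. The Python sketch below assumes each contact is recorded with an order ID, account ID, phone number, timestamp, and the actions taken; the `Contact` fields, action names, and thresholds are hypothetical placeholders.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class Contact:
    order_id: str
    account_id: str
    phone: str
    at: datetime
    actions: frozenset  # e.g. frozenset({"refund", "contact_change"})
    return_received: bool = False

def operational_flags(contacts, window=timedelta(hours=24), max_contacts=3, max_phones=2):
    """Return a set of (flag, key) pairs for human review. Thresholds are illustrative."""
    flags = set()
    times_by_order, phones_by_account = {}, {}
    for c in contacts:
        times_by_order.setdefault(c.order_id, []).append(c.at)
        phones_by_account.setdefault(c.account_id, set()).add(c.phone)
        if {"refund", "contact_change"} <= c.actions:
            flags.add(("refund_and_contact_change_same_call", c.order_id))
        if "refund" in c.actions and not c.return_received:
            flags.add(("refund_before_return_received", c.order_id))
    for order_id, times in times_by_order.items():
        times.sort()
        # Sliding window: any max_contacts consecutive contacts inside `window`
        for i in range(len(times) - max_contacts + 1):
            if times[i + max_contacts - 1] - times[i] <= window:
                flags.add(("many_contacts_same_order", order_id))
                break
    for account_id, phones in phones_by_account.items():
        if len(phones) > max_phones:
            flags.add(("many_phone_numbers_same_account", account_id))
    return flags
```

The last signal (approval spikes by one agent or team) needs a baseline across all agents, so it is left out of this per-contact sketch.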

Defense rules that stop deepfake refund attacks

These guardrails can stop many deepfake refund attempts quickly:

- No refunds to a new payout method over the phone. Require confirmation via a logged-in session or a secure step-up process.

- No contact-information changes and refunds in the same interaction. Enforce a cooldown (24–72 hours) before issuing refunds or credits after an email, phone, or address change.

- Treat store credit and gift cards as cash. Add supervisor approval thresholds and caps, and send confirmations to the email/app already on file.

- For high-risk SKUs (stock keeping units), tie refunds to return validation (a carrier scan or warehouse receipt) before releasing the reimbursement.

- Default to “confirm through the channel you already trust”: send a secure confirmation link to the email or app on file. If the caller can’t access either, open a case and complete the refund only after confirmation.

- Bots leave footprints. Add basic monitoring: contacts per order, contacts per account per day, refund approvals by agent (outliers), % refunds before return received, and % refunds issued within 72 hours of contact info changes.
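These rules can be encoded as a pre-approval check in the support tool, so agents don’t have to remember them on a busy shift. The Python sketch below assumes a hypothetical `RefundRequest` record and `decide` function; the 48-hour cooldown (picked from the 24–72 hour range), the 150.00 store-credit threshold (in your store currency), and the SKU list are placeholders, not recommendations.

```python
from dataclasses import dataclass, replace
from datetime import datetime, timedelta
from typing import Optional

COOLDOWN = timedelta(hours=48)        # placeholder within the 24-72 hour range
CREDIT_SUPERVISOR_THRESHOLD = 150.00  # hypothetical amount; set per brand
HIGH_RISK_SKUS = {"BAG-LTD-01"}       # hypothetical limited-drop SKU

@dataclass
class RefundRequest:
    channel: str                          # "phone", "chat", "app"
    amount: float
    payout: str                           # "original_method", "new_method", "store_credit"
    sku: str
    contact_changed_at: Optional[datetime]
    contact_change_this_interaction: bool
    return_scanned: bool                  # carrier scan or warehouse receipt
    confirmed_on_file_channel: bool       # confirmation link accepted via email/app on file
    now: datetime

def decide(r: RefundRequest) -> str:
    # Hard denials first, then holds, then escalation: the most restrictive outcome wins
    if r.payout == "new_method" and r.channel == "phone":
        return "deny: new payout method requires a logged-in session or step-up"
    if r.contact_change_this_interaction:
        return "deny: no contact change and refund in the same interaction"
    if r.contact_changed_at and r.now - r.contact_changed_at < COOLDOWN:
        return "hold: cooldown after contact change"
    if r.sku in HIGH_RISK_SKUS and not r.return_scanned:
        return "hold: awaiting return validation"
    if not r.confirmed_on_file_channel:
        return "hold: send confirmation to on-file email/app"
    if r.payout == "store_credit" and r.amount > CREDIT_SUPERVISOR_THRESHOLD:
        return "escalate: supervisor approval for store credit"
    return "approve"

now = datetime(2024, 12, 1, 20, 0)
base = RefundRequest(channel="phone", amount=80.0, payout="original_method", sku="TEE-BASIC",
                     contact_changed_at=None, contact_change_this_interaction=False,
                     return_scanned=False, confirmed_on_file_channel=True, now=now)
print(decide(base))                                # approve
print(decide(replace(base, payout="new_method")))  # deny: new payout method requires a logged-in session or step-up
```

Returning a reason string (rather than a bare yes/no) gives the agent a neutral script line and makes each outcome easy to log for the monitoring metrics listed above.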